About Me

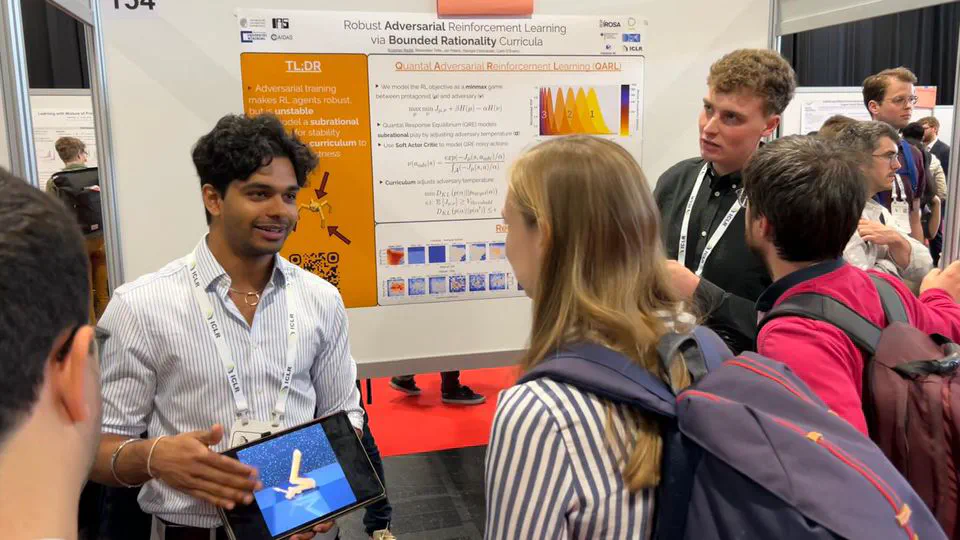

I am a PhD student in reinforcement learning at the LiteRL group at the Technical University of Darmstadt in partnership with the Intelligent Autonomous Systems lab and Hessian.AI, supervised by Professor Carlo D’Eramo 🎓

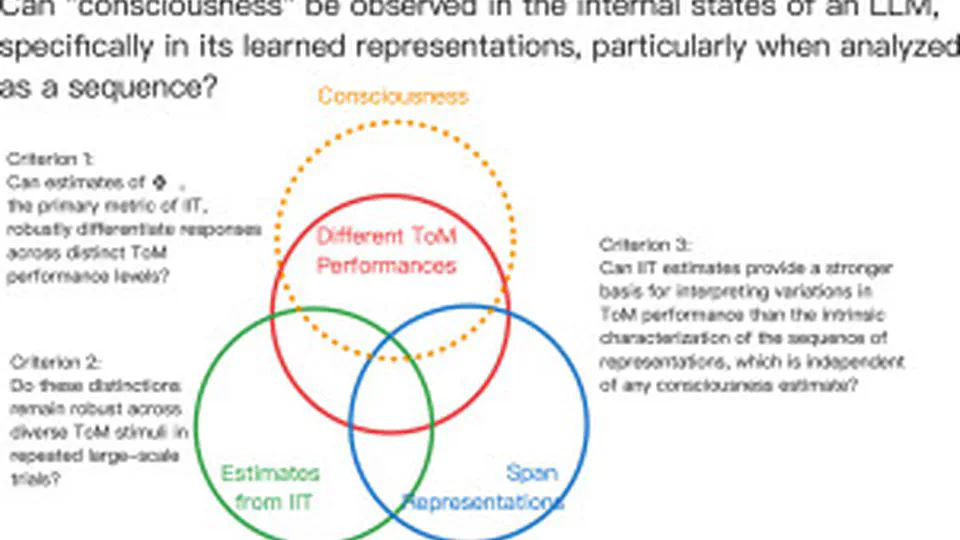

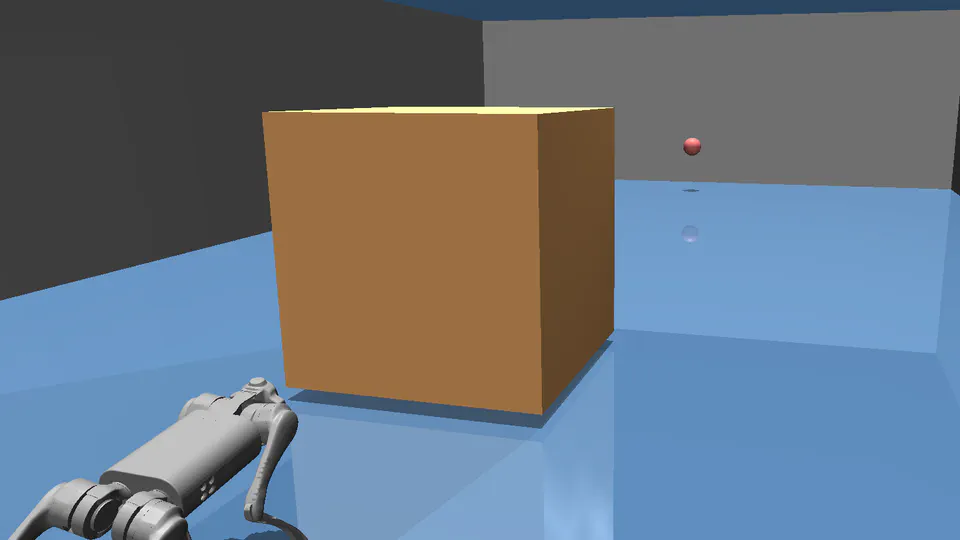

I am interested in developing sample-efficient techniques in deep multi-agent reinforcement learning using insights from applied mathematics and game theory 🕹️

I am a continuum hypothesis skeptic, a mereological universalist, and a Collatz conjecture supporter.

- Reinforcement Learning

- Game Theory

- Ethics and Philosophy

PhD in Computer Science

Technical University of Darmstadt, Germany

MEng & BA in Information and Computer Engineering

University of Cambridge, United Kingdom

Experience

Machine Learning Research Engineer

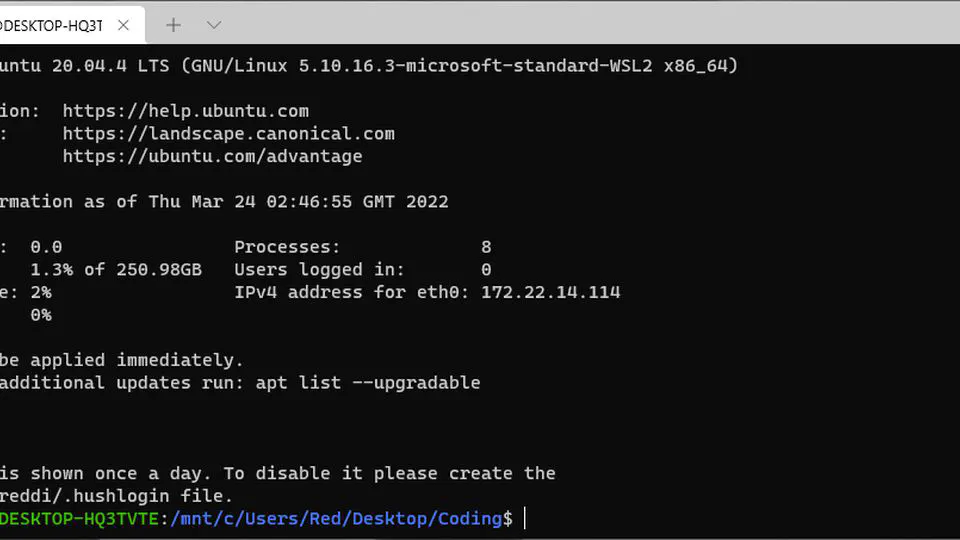

Arm, Cambridge, United Kingdom- Developed an open-source tool (ML Inference Advisor) to optimise neural networks for inference on Arm GPUs using Python (PyTorch, Tensorflow, Numpy, Jupyter, Pandas), C++, Kubernetes, & Docker.

- Achieved a 20% boost in GPU inference throughput by analyzing TensorFlow operator efficiency using deep learning clustering and pruning techniques

- Enhanced IoT device performance by 12% by benchmarking over 30 silicon-on-chip devices using Python, Jenkins CI, SQL, & Kubernetes

Education

PhD in Computer Science

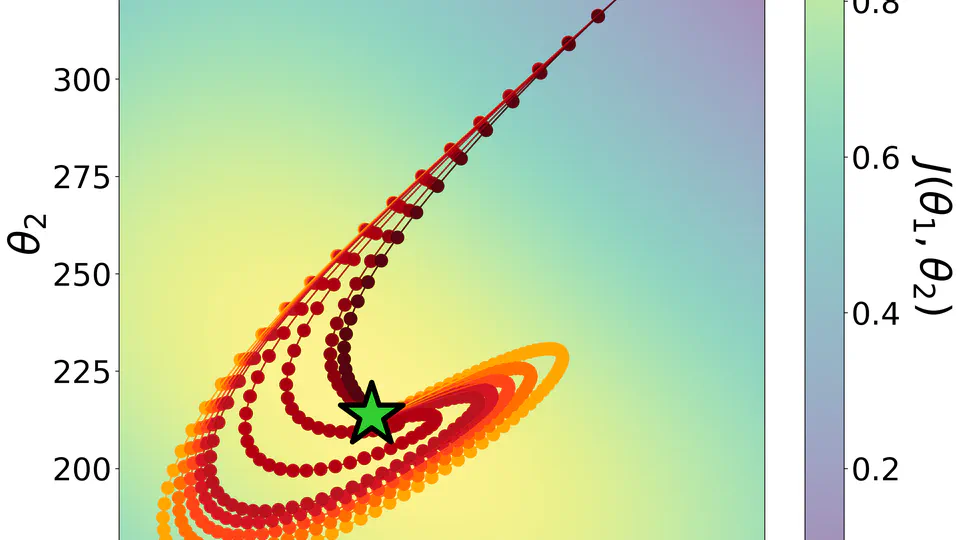

Technical University of Darmstadt, GermanyMy focus is on developing sample-efficient algorithms for exploration, coordination, and communication in multi-agent reinforcement learning using insights from applied mathematics and game theory.

I believe bridging the gap between practical deep learning and theoretical models of stochastic optimisation is essential for scaling RL in real-world settings.

I build algorithms which exhibit high performance in high-dimensional environments while providing mathematical insights using probability theory, linear algebra, calculus, & functional analysis.

MEng & BA in Information and Computer Engineering

University of Cambridge, United KingdomRead Thesis- Grade: Distinction (GPA 4.0 Equivalent)

- Received the David Thompson prize for academic achievement

Communities

- I am a teaching assistant for the Reinforcement Learning course at TU Darmstadt 🤖

- Former Secretary of the Cambridge University Libertas Society, Cambridge’s first free speech society ✒️

Interesting links

- Don’t ask to ask, just ask

- The XY Problem

- The Life of Jos Claerbout

- The Worst Rob Liefeld Drawings (the funniest article ever written)

Contact

✉️ aryaman{}reddi{}tu-darmstadt.de

📍 E327, S2|02 Robert-Piloty-Gebäude, Technical University of Darmstadt, Darmstadt 64289